Math fascinates me much more now than it did in school. I wish I had taken more interest in advanced math while I was in school, because I feel like I would use it in my job. Part of the problem is the way that mathematics is taught, and for that I place part of the blame on my teachers during my formative years. Nobody cares about when a couple of trains would intersect. If the material had been related to things that interested me at the time, I would definitely have enjoyed it more.

I was reading an article from Harvard Business Review about the average cost overruns in large IT projects, and there was a figure thrown out about the average dollar amount by which these projects overrun. This figure suffers from the same problem that Larry Ponemon's average data breach record cost figure does: it doesn't relate to me! The authors of the article go on to give an average percentage by which projects overrun, which is much more applicable to me than a dollar amount, but still leaves me with questions about the validity of that number. Posting an average without a standard deviation is sensationalism at best and irresponsible at worst.

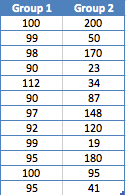

Let me illustrate this with some real numbers: two different data sets with the exact same average that tell quite different stories.

Sample Data Sets

Group 2 has a much wider spread of data than Group 1, but both have the exact same average: 97.25. If you have a much larger data set, where a quick glance can't tell you that the values are very different, you can calculate the standard deviation, which measures how much the values vary around the average. The standard deviation of Group 1 is 5.9, and that of Group 2 is 65.3. For roughly normally distributed data, about 68% of the values fall within one standard deviation of the average, which for Group 1 means between about 91 and 103. About 95% fall within two standard deviations (between about 85 and 109), and about 99.7% fall within three (between about 80 and 115). Applying the same rule to Group 2, about 68% of its values would fall between about 32 and 163, a much larger range. What this means is that Group 1's average is much more representative of its data than Group 2's average is.
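To make the comparison concrete, here is a minimal Python sketch using the standard library's `statistics` module. The values in `group_a` and `group_b` are made up for illustration and are not the data sets from this post; the `summarize` helper is likewise a hypothetical name. It assumes you want the sample standard deviation, which is what `statistics.stdev` computes.

```python
from statistics import mean, stdev

# Hypothetical values, invented for illustration (not the post's data sets).
# Both lists share the same average but have very different spreads.
group_a = [90, 95, 100, 105]
group_b = [20, 60, 110, 200]


def summarize(values):
    """Return (average, sample standard deviation, low, high).

    low and high mark the band one standard deviation either side
    of the average.
    """
    avg = mean(values)
    sd = stdev(values)  # sample standard deviation; needs at least two values
    return avg, sd, avg - sd, avg + sd


for name, values in (("A", group_a), ("B", group_b)):
    avg, sd, low, high = summarize(values)
    print(f"Group {name}: average={avg:.2f}, stdev={sd:.2f}, "
          f"one-sigma band={low:.2f} to {high:.2f}")
```

If your numbers are the entire population rather than a sample, `statistics.pstdev` is probably the better fit. Most spreadsheets offer the same choice between a sample and a population version of the function.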

How does this apply to us? Let's say that the data sets are calculations of how much money companies paid per record to clean up a breach. With a small standard deviation like in Group 1, you could take the calculation of $97 per record and maybe make some decisions based on that number. With Group 2, $97 is not a good measure, as the highest number was $200 and the lowest was $19. So what can we do to still use average dollar amounts but make them more relevant?

I propose that the data sets be broken into like groups where the standard deviation is low, to give people more context for the data's results. In Larry's case, maybe he should break it into subgroups defined by the company's size, industry, volume, market cap, or breach size, and avoid releasing figures that report averages on the entire data set without a standard deviation.
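As a rough sketch of that idea, the Python below groups cost-per-record figures by a category and reports the average and standard deviation for each subgroup next to the figures for the whole set. Everything here is an assumption for illustration: the `records` list is invented (it is not Ponemon's data), industry is just one possible grouping key, and `summarize_by_group` is a hypothetical helper.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical (industry, cost per record in dollars) pairs, invented for
# illustration only.
records = [
    ("retail", 92), ("retail", 97), ("retail", 101),
    ("healthcare", 190), ("healthcare", 200), ("healthcare", 210),
    ("education", 19), ("education", 25), ("education", 31),
]


def summarize_by_group(rows):
    """Return {group: (average, sample standard deviation)}.

    Each group needs at least two values for stdev to work.
    """
    grouped = defaultdict(list)
    for group, value in rows:
        grouped[group].append(value)
    return {group: (mean(vals), stdev(vals)) for group, vals in grouped.items()}


# Figures for the whole data set, ignoring the grouping.
all_values = [value for _, value in records]
overall = (mean(all_values), stdev(all_values))

by_group = summarize_by_group(records)

print(f"overall: average={overall[0]:.2f}, stdev={overall[1]:.2f}")
for group, (avg, sd) in by_group.items():
    print(f"{group}: average={avg:.2f}, stdev={sd:.2f}")
```

With data shaped like this, each subgroup's standard deviation comes out far smaller than the overall one, so each subgroup's average says more about its members than the single overall average does.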

So the next time you see an average dollar amount associated with some population of data, do yourself a favor and dig into the numbers a bit more. If you understand the spread of information, you will be able to understand how much you can value the summary. Standard deviation is one of many measures you can use, but it's one that is easy to understand and is built into nearly every spreadsheet program available.

Tags: math

Source: https://www.brandenwilliams.com/blog/2011/09/01/more-problems-with-averages/
